Speech Filing System

How To: Orthographic and Phonetic Annotation with SFS

This document provides a tutorial introduction to the use of SFS for the orthographic and phonetic transcription of a speech recording, including tools for automatic alignment of phonetic transcription to the signal. This tutorial refers to versions 4.6 and later of SFS.

Contents

- Acquiring and chunking the audio signal

- Orthographic transcription

- Phonetic transcription

- Aligning phonetic transcription

- Verification and post-processing

- Annotation of dysfluent speech

1. Acquiring and Chunking the audio signal

Acquiring the signal

You can use the SFSWin program to record directly from the audio input signal on your computer. Only do this if you know that your audio input is of good quality, since many PCs have rather poor quality audio inputs. In particular, microphone inputs on PCs are commonly very noisy.

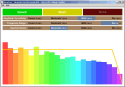

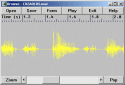

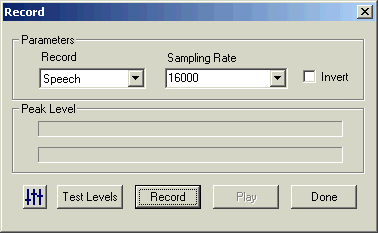

To acquire a signal using SFSWin, choose File|New, then Item|Record. See Figure 1.1. Choose a suitable sampling rate; at least 16000 samples/sec is recommended. It is usually not necessary to choose a rate faster than 22050 samples/sec for speech signals.

Figure 1.1 - SFSWin record dialog

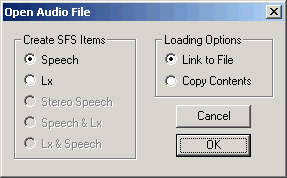

If you choose to acquire your recording into a file using some other program, or if it is already in an audio file, choose Item|Import|Speech rather than Item|Record to load the recording into SFS. If the file is recorded in plain PCM format in a WAV audio file, you can also just open the file with File|Open. In this latter case, you will be offered a choice to "Copy contents" or "Link to file" to the WAV file. See Figure 1.2. If you choose copy, then the contents of the audio recording are copied into the SFS file. If you choose link, then the SFS file simply "points" to the WAV file so that it may be processed by SFS programs, but it is not copied (this means that if the WAV file is deleted or moved SFS will report an error).

Figure 1.2 - SFSWin open WAV file dialog
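Before importing a recording made with another program, it can help to confirm that the file really is plain PCM and that its sampling rate is at least the recommended 16000 samples/sec. The sketch below is not part of SFS; it uses Python's standard `wave` module, and the function name `describe_wav` is purely illustrative.

```python
import wave

def describe_wav(path):
    """Return basic format details for a plain PCM WAV file."""
    # wave.open raises wave.Error if the file is not a WAV format it can read.
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "bits_per_sample": wav.getsampwidth() * 8,
            "sample_rate": rate,
            "duration_s": wav.getnframes() / rate,
            # 16000 samples/sec is the minimum rate recommended in the text above.
            "rate_ok_for_speech": rate >= 16000,
        }
```

Calling `describe_wav("recording.wav")` on a hypothetical file returns a dictionary that can be inspected before choosing File|Open or Item|Import|Speech.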

Preparing the signal

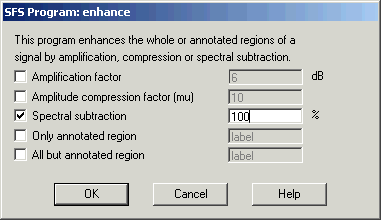

If the audio recording has significant amounts of background noise, you may like to try and clean the recording using Tools|Speech|Process|Signal enhancement. See Figure 1.3. The default setting is "100% spectral subtraction"; this subtracts 100% of the quietest spectral slice from every frame. This is a fairly conservative level of enhancement, and you can try values greater than 100% to get a more aggressive enhancement, but at the risk of introducing artifacts.

Figure 1.3 - SFSWin enhancement dialog

It is also suggested at this stage that you standardise the level of the recording. You can do this with Tools|Speech|Process|Waveform preparation, choosing the option "Automatic gain control (16-bit)".

Chunking the signal

If your audio recording is longer than a single sentence, you will almost certainly gain from first chunking the signal into regions of about one sentence in length. Chunking involves adding a set of annotations which delimit sections of the signal. The advantages of chunking include:

- it means that transcription is roughly aligned to the signal

- it makes it easier to navigate around the signal

- it improves the performance of automatic phonetic alignment

- it allows the export of a "click-to-listen" web page using VoiScript (see below)

- it allows us to use SFS annotations to store transcription, since SFS limits annotations to 250 characters

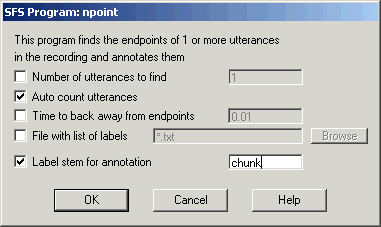

An easy way to chunk the signal is to automatically detect pauses using the "npoint" program. This takes a speech signal as input and creates a set of annotations which mark the beginning and end of each region where someone is speaking. It is a simple and robust procedure based on energy in the signal. To use this, select the speech item and choose Tools|Speech|Annotate|Find multiple endpoints. See Figure 1.4. If you know the number of spoken chunks in the file (it may be a recording of a list of words, for example), enter the number using the "Number of utterances to find" option, otherwise choose the "Auto count utterances" option. Put "chunk" (or similar) as the label stem for annotation.

Figure 1.4 - SFSWin find multiple endpoints dialog
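To give a feel for what energy-based pause detection involves, here is a minimal Python sketch. It is not the algorithm used by npoint: the frame length, energy threshold and minimum pause duration are illustrative assumptions, and the function name `find_chunks` is hypothetical.

```python
def find_chunks(samples, rate, frame_s=0.01, threshold=0.01, min_pause_s=0.25):
    """Return (start_s, end_s) pairs for regions whose energy exceeds threshold.

    samples: sequence of floats in the range -1..1
    rate:    sampling rate in samples/sec
    """
    frame_len = max(1, int(round(rate * frame_s)))
    min_pause_frames = max(1, int(round(min_pause_s / frame_s)))

    # Mark each non-overlapping frame as active if its mean-square energy is high.
    active = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        energy = sum(x * x for x in frame) / len(frame)
        active.append(energy > threshold)

    chunks = []
    chunk_start = None   # index of first frame of the chunk being built
    silent_run = 0       # number of consecutive quiet frames seen inside it
    for i, is_active in enumerate(active):
        if is_active:
            if chunk_start is None:
                chunk_start = i
            silent_run = 0
        elif chunk_start is not None:
            silent_run += 1
            if silent_run >= min_pause_frames:
                # Pause is long enough: close the chunk after its last active frame.
                end = i - silent_run + 1
                chunks.append((chunk_start * frame_len / rate, end * frame_len / rate))
                chunk_start = None
                silent_run = 0
    if chunk_start is not None:
        end = len(active) - silent_run
        chunks.append((chunk_start * frame_len / rate, end * frame_len / rate))
    return chunks
```

Quiet stretches shorter than `min_pause_s` are kept inside a chunk, which is why brief pauses within an utterance do not split it.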

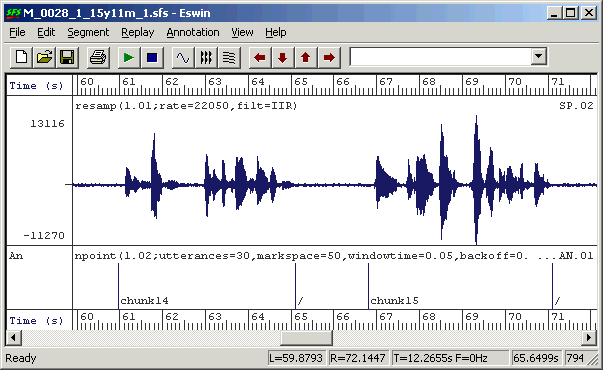

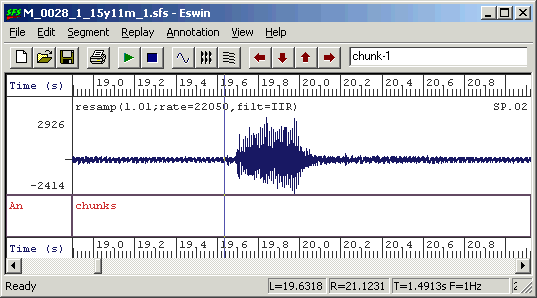

If you view the results of the chunking you will see that each spoken region has been labelled with "chunkdd" (the label stem followed by a two-digit number), while the pauses will be labelled with "/". See Figure 1.5.

Figure 1.5 - chunked signal

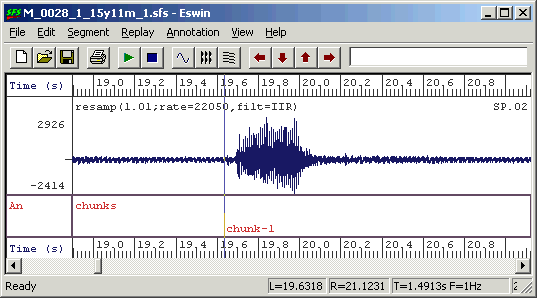

If the chunking has not worked properly, or if you want to chunk the signal by hand, you can use the manual annotation facility in eswin. To do this, select the signal you want to annotate and choose Item|Display to start the eswin program. Then choose eswin menu option Annotation|Create/Edit Annotations, and enter either a set name of "chunks" to create a new set of annotations, or enter "endpoints" to edit the set of annotations produced by npoint.

When eswin is ready to edit annotations you will see a new region at the bottom of the screen where your annotations will appear. To add a new annotation, position the left cursor at the time where you want the annotation to appear. Then type in the annotation into the annotation box on the toolbar and press [RETURN]. The annotation should appear at the position of the cursor. See Figure 1.6.

Figure 1.6 - adding an annotation in eswin

To move an annotation with the mouse, position the mouse cursor on the annotation line within the bottom annotation box. You will see that the mouse cursor changes shape into a double-headed arrow. Press the left mouse button and drag the annotation left or right to its new location. This is also an easy way to correct chunk endpoints found automatically by npoint.

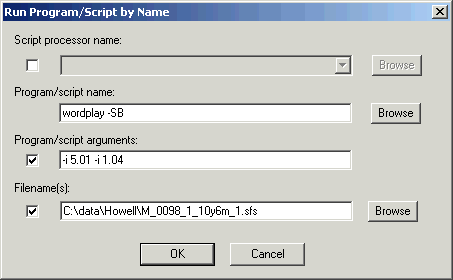

Finally, to hear if the chunking has worked properly, you can listen to the chunked recording using the SFS wordplay program. This program is not on the SFSWin menus, so to run it, choose Tools|Run program/script then enter "wordplay -SB" in the "Program/script name" box. See Figure 1.7. This will replay each chunk in turn, separating the chunks with a small beep.

Figure 1.7 - SFSWin run program dialog

2. Orthographic transcription

Entering orthographic transcription

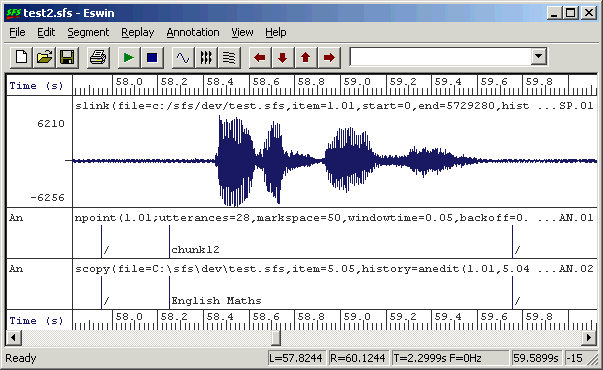

Assuming that your recording has been chunked into sentence-sized regions, the process of orthographic transcription is now just the process of replacing the "chunk" labels with the real spoken text. The result will be a new annotation item in the file, but where each annotation contains the orthographic transcription of a chunk of signal. See Figure 2.1.

Figure 2.1 - Speech chunks and orthographic transcription

You can edit annotation labels using the eswin display program, but it is not very easy - you have to overwrite each annotation label with the transcription. A much easier way is to use the anedit annotation label editor program. This program allows you to listen to the individual annotated regions and to edit the labels of annotations without affecting their timing.

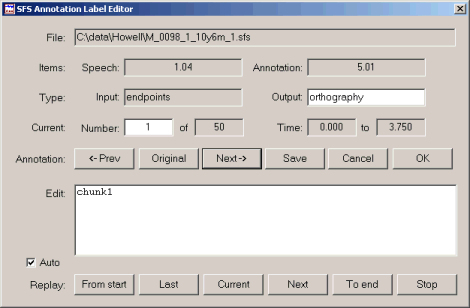

To run anedit, select a speech item and the annotation item containing the chunks and choose Tools|Annotations|Edit Labels. Since you are mapping one set of annotations into another, change the "output" annotation type to "orthography". See Figure 2.2.

Figure 2.2 - anedit window

The row of buttons in the middle of the annotation editor window controls the set of annotations:

- ←Prev: Move to previous annotation in the list.

- Original: Reload the original annotation label at this position.

- Next→: Move to next annotation in the list.

- Save: Save the changes you've made so far to the SFS file.

- Cancel: Forget annotation label changes and exit.

- OK: Save annotation label changes and exit.

The row of buttons at the bottom of the annotation editor window controls the replay of the speech signal:

- From start: Replay from the start of the file to end of the current annotated region.

- Last: Replay from the start of the last annotated region to the end of the current annotated region.

- Current: Replay the current annotated region.

- Next: Replay from the start of the current annotated region to the end of the next annotated region.

- To end: Replay from the start of the current annotated region to the end of the file.

- Stop: Stop any current replay.

The "Auto" replay feature causes the current annotated region to be replayed each time you change to a different annotation.

To use anedit for entering orthographic transcription, first check that the "Auto" replay feature is enabled and that you are positioned at the first chunk of speech. Replay this with the "Current" button, select and over-write the old label with the text that was spoken. Then press the [RETURN] key. Two things should happen: first you should move on to the next chunk in the file and second that chunk of signal should be replayed. You can now proceed through the file, entering a text transcription and pressing [RETURN] to move on to the next chunk.

If you need to hear the signal again, use the buttons at the bottom of the screen. Every so often I suggest you save your transcription back to the file with the "Save" button. This ensures you will not lose a lot of work should something go wrong.

One word of warning: at present SFS is limited to annotations that are less than 250 characters long. Anedit prevents you from entering longer labels. There is no limit to the number of labels however.

Conventions

It is worth thinking about some conventions about how you enter transcription. For example, should you start utterances with capital letters, or terminate them with full stops? Should you use punctuation? Should you use abbreviations and digits? Should you mark non-speech sounds like breath sounds, lip smacks or coughs?

Here is one convention that you might follow, which has the advantage that it is also maximally compatible with SFS tools.

- put all words except proper nouns in lower case

- do not include any punctuation

- spell out all abbreviations and numbers, e.g. "g. c. s. e." not "GCSE", "one hundred and two" not "102", "ten thirty" not "10:30".

- mark non-speech sounds in a special way, e.g. "[cough]"

For pause regions you can either choose to label these using a special symbol of your own (e.g. "[pause]"), or leave them annotated as "/", or label them with the SAMPA symbol for pause which is "...".
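If you keep your transcription labels in a text file while drafting, a small checker can catch departures from these conventions before the labels go into SFS. The following Python sketch is only an illustration: the function name `check_label` and the exact set of punctuation tested are assumptions. Full stops are deliberately not flagged, because the spelled-out abbreviation example above uses them, and capital letters are not checked because proper nouns are allowed.

```python
import re

MAX_LABEL_CHARS = 249  # the text says labels must be less than 250 characters long

def check_label(label):
    """Return a list of convention problems found in one orthographic label."""
    problems = []
    if len(label) > MAX_LABEL_CHARS:
        problems.append("too long")
    # Ignore bracketed non-speech markers such as [cough] or [pause].
    words_only = re.sub(r"\[[^\]]*\]", " ", label)
    if re.search(r"\d", words_only):
        problems.append("contains digits")
    # Apostrophes and full stops are left alone (see the note above).
    if re.search(r"[,;:!?\"()]", words_only):
        problems.append("contains punctuation")
    return problems
```

For example, `check_label("ten thirty [cough]")` returns an empty list, while `check_label("at 10:30")` reports both digits and punctuation.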

Making a clickable script

Once you have a chunked and transcribed recording you can distribute your transcription as a "clickable script" using the VoiScript program (available for free download from http://www.phon.ucl.ac.uk/resource/voiscript/). The VoiScript program will display your transcription and replay parts of it in response to mouse clicks on the transcription itself. This makes it a very convenient vehicle for others to listen to your recording and study your transcription.

VoiScript takes as input a WAV file of the audio recording and an HTML file containing the transcription coded as links to parts of the audio. Technical details can be found on the VoiScript web site. To save your recording as a WAV file, choose Tools|Speech|Export|Make WAV file, and enter a suitable folder and name for the file. The following SML script can be used to create a basic HTML file compatible with VoiScript:

/* anscript.sml - convert annotation item to VoiScript HTML file */

/* takes as input file.sfs and outputs HTML

assuming audio is in file.wav */

main {

string basename

var i,num

i=index("\.",$filename);

if (i) basename=$filename:1:i-1 else basename=$filename;

print "<html><body><h1>",basename,"</h1>\n";

num=numberof(".");

for (i=1;i<=num;i=i+1) if (compare(matchn(".",i),"/")!=0) {

print "<a name=chunk",i:1

print " href='",basename,".wav#",timen(".",i):1:4

print "#",(timen(".",i)+lengthn(".",i)):1:4,"'>"

print matchn(".",i),"</a>\n"

}

print "</body></html>\n"

}


Copy and paste this script into a file "anscript.sml". Then select the annotation item you want to base the output on and choose Tools|Run SML script. Enter "anscript.sml" as the SML script filename and the name of the output HTML file as the output listing filename in the same directory as the WAV audio file.
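For readers who want to see the shape of the generated HTML without running SFS, here is a rough Python equivalent of the link-building step in the script above. It is a sketch only: the function name `make_voiscript_html` and the use of `(start, length, label)` tuples as input are assumptions, and it mirrors the link format printed by the SML script rather than any separately documented VoiScript specification.

```python
from html import escape

def make_voiscript_html(basename, annotations):
    """Build clickable-script HTML from (start_s, length_s, label) tuples.

    Assumes the audio is in '<basename>.wav', as the SML script does.
    """
    lines = ["<html><body><h1>%s</h1>" % escape(basename)]
    for number, (start, length, label) in enumerate(annotations, start=1):
        if label == "/":
            continue  # pause annotations get no link, as in the SML script
        lines.append(
            "<a name=chunk%d href='%s.wav#%.4f#%.4f'>%s</a>"
            % (number, basename, start, start + length, escape(label, quote=False))
        )
    lines.append("</body></html>")
    return "\n".join(lines) + "\n"
```

Each link encodes the start and end time of the chunk in seconds after the WAV file name, separated by "#" characters, and chunk numbers count every annotation including the skipped pauses.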

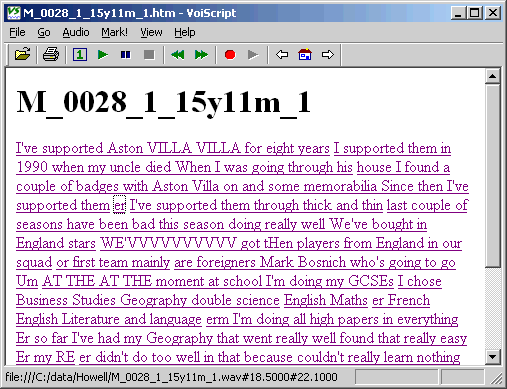

If you now open the output HTML file within the VoiScript program, you will be able to read and replay parts of the transcription on demand, see Figure 2.3.

Figure 2.3 - example VoiScript clickable script

3. Phonetic transcription

Spelling to sound

We now have a chunked orthographic transcription of our recording roughly aligned to the audio signal. The next stage is to translate the orthography for each chunk into a phonetic transcription. If we know the language, this is a largely mechanical procedure of looking up words in a dictionary. If the language is English, the mechanical part of the process can be performed by the antrans program.

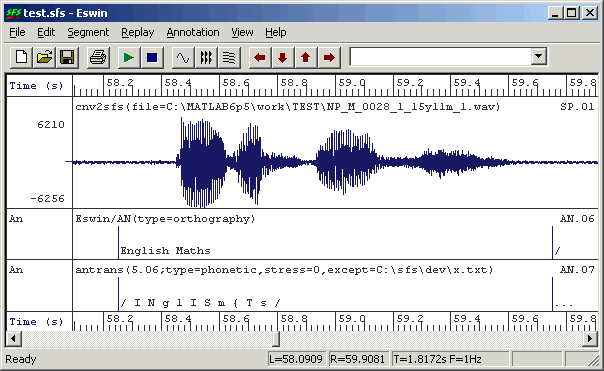

The SFS program antrans performs the phonetic transcription of orthography using a built-in English pronunciation dictionary. The program takes orthographic annotations as input and produces transcribed annotations as output, in which only the content of the labels has been changed. See Figure 3.1.

Figure 3.1 - Transcribed annotations

It will almost certainly be the case that antrans will do an imperfect job in any real situation, since:

- it only produces a single pronunciation for each word, and that may not be the pronunciation used by the speaker.

- it may not know all the words used: although it has a large dictionary, it cannot know all names, places and abbreviations.

- it does not take into account any possible or actual contextual changes to pronunciation, such as assimilations and elisions.

- it can only guess that each chunk begins and ends in silence.

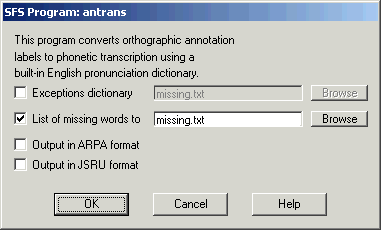

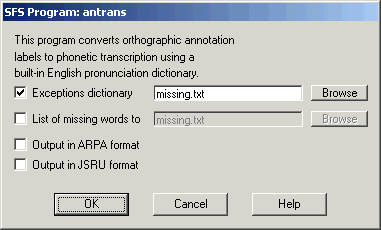

To run antrans, select the input annotation item and choose Tools|Annotation|Transcribe labels.

The first time this is run, collect a list of words that antrans doesn't know by using the 'Missing word list' option, see Figure 3.2. After the program has run, edit the word list (in Windows notepad for example) and add a transcription to each word, saving the resulting file as an exceptions list. This can then be incorporated in a second run of antrans (you can delete the output of the first run), see Figure 3.3.

Figure 3.2 - SFSWin Transcribe labels dialog (1)

Figure 3.3 - SFSWin Transcribe labels dialog (2)

The format of the exceptions file is as follows. It is a text file where each line is the pronunciation of a single word. A word is a sequence of printable characters that do not contain a space. The spelling of the word is followed by a TAB character, and then the transcription follows in SAMPA notation. It is usually not necessary to separate the SAMPA segment symbols with spaces, but it does not do any harm. Include stress symbols only if you intend to use them later. Here is an example:

1990	naInti:n naIntI

Bosnich	bQznItS

MATHS	m{Ts

WE'VE	wi:v

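Because a missing TAB or a stray space in the spelling is easy to overlook in a text editor, it may be worth checking the exceptions file before the second run. The Python sketch below follows the format described above (spelling, TAB, SAMPA transcription); the function name `parse_exceptions` is hypothetical, and skipping blank lines is an assumption about what is acceptable rather than documented antrans behaviour.

```python
def parse_exceptions(text):
    """Map each word to its SAMPA transcription from TAB-separated lines."""
    entries = {}
    for line_number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # assumption: blank lines are simply ignored
        word, tab, transcription = line.partition("\t")
        # A valid line has a space-free spelling, a TAB, and a transcription.
        if not tab or not word or " " in word or not transcription.strip():
            raise ValueError("line %d is not 'word<TAB>transcription'" % line_number)
        entries[word] = transcription.strip()
    return entries
```

Reading the file contents and passing them to `parse_exceptions` either returns a dictionary of word-to-transcription pairs or raises `ValueError` naming the first badly formed line.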

A simple way to correct the transcription is to use the anedit program again, just as we did for entering orthographic transcription in section 2.

Transcription systems

The SFS tools are designed to work with the SAMPA transcription system by default, but antrans can also use transcriptions in ARPA and JSRU systems. The table below gives a comparison of the symbol systems with the IPA.


In addition, the following symbols are used to mark stress and silence:

| IPA | Description | SAMPA | ARPA | JSRU |

|---|---|---|---|---|

| ˈ | primary stress | " | " | |

| ˌ | secondary stress | % | ' | |

| | silence | / | sil | q |

| | pause | ... | sil | q |

4. Aligning phonetic transcription

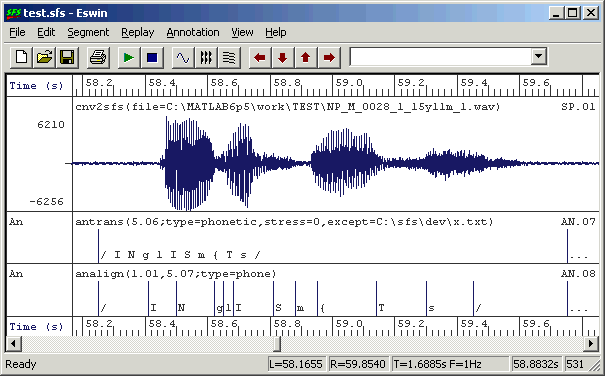

At this point we have a chunked phonetic transcription: each spoken chunk of the signal is annotated with a unit of phonetic transcription. The next stage is to break up the transcription into individual segment labels and roughly align the labels to the signal.

See Figure 4.1.

A basic level of alignment can be performed by the SFS analign program.

Figure 4.1 - Chunked vs. aligned transcription

Automatic alignment

Analign has two modes of operation. In the first mode, input is a set of transcribed chunks in which the start and end points of the chunks are fixed. The program then finds an alignment between the segments in the transcription and the signal region identified by the chunk. In the second mode, the program chooses chunks on the basis of pause labels, and all phonetic annotations between the pauses are realigned. By default pauses are identified by labels containing the SAMPA pause symbol "...". You can use the first mode to get a basic alignment, then you can use the second mode to refine the alignment by adding or deleting phonetic annotations and re-running analign.

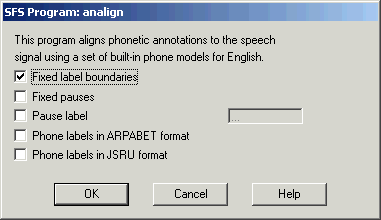

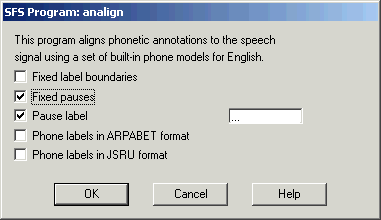

To align chunked phonetic transcription, select the input speech and annotation items and choose Tools|Annotation|Auto-align phone labels. Choose option "Fixed label boundaries" to only perform alignment within a label. See Figure 4.2.

Figure 4.2 - SFSWin Align Labels dialog (1)

The automatic alignment is performed using a set of phone hidden-Markov models which have been trained on Southern British English. You may need to replace these for other languages and accents. Look at the manual page of analign for details. The HMMs that come with SFS have been built using the Cambridge hidden Markov modelling toolkit HTK.

Automatic alignment is an approximate process, and you will almost certainly see places in the aligned transcription where the alignment is not satisfactory. Common kinds of problems are:

- Segments stretched over unmarked pauses.

- Segments compressed when smoothed or elided in rapid speech

- Poor alignment in consonant clusters and unstressed syllables in rapid speech.

- Poor identification of speech-to-silence boundaries

- Poor alignment for syllable-initial glides and syllable-final nasals.

You can either correct the alignment manually or you can make changes to the transcription and run analign again. We'll describe these in turn.

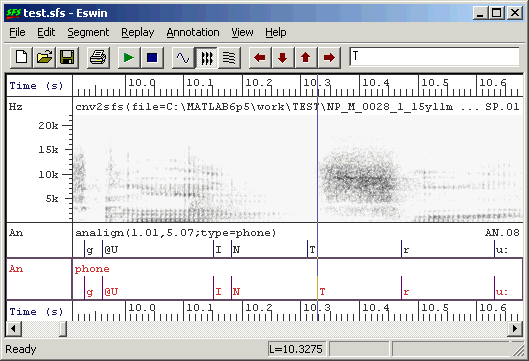

Manual editing of transcription alignment

To edit a set of annotations, select a speech signal and the annotations to be edited and choose Item|Display. The waveform and the input annotations are displayed within SFS program eswin. Then right-click with the mouse in the box at the left of the annotations and choose menu option "Edit annotations". An editable copy of the set of annotations will then appear at the bottom of the screen.

eswin has a number of special facilities to help in the correction of annotation alignments. To demonstrate these, zoom into a region of the signal so that individual annotations are clearly visible. Then click the left mouse button to display the vertical cursor. You will then find that:

- the left and right arrow keys [←] and [→] shift the left cursor one pixel to the left and right.

- pressing [Ctrl] together with the arrow keys will cause the left cursor to jump from one annotation to the previous/next annotation. The annotation label is also copied into the annotation edit box.

- with the left cursor on an annotation, pressing [Shift] together with the arrow keys will slide the annotation one pixel left and right.

- with the left cursor on an annotation, pressing the [Delete] key will delete an annotation.

You can also delete an annotation by deleting the contents of the annotation edit box and pressing [Return] while the cursor is positioned on an annotation. Figure 4.3 shows an annotation being moved using the arrow keys.

Figure 4.3 - Manual editing of annotations

Semi-automatic alignment correction

If the automatic alignment has failed for fairly obvious reasons, it may be more efficient to redo the alignment with the problem fixed than to reposition every annotation manually. For example, a common problem is a failure to mark short pauses that occur within utterances. It is easy to add these pauses as new annotations (with "/" symbols) and to re-do the automatic alignment.

Because we have aligned the transcription once, we do not want analign to preserve the current annotation label boundaries. Instead we probably want to preserve the position of major pauses in the transcription (marked with "..." symbols). To re-do the alignment this way, select the speech signal and the edited aligned annotations and choose Tools|Annotations|Auto-align phone labels, but choosing option "Fixed pauses", see Figure 4.4. If you use a different symbol to "..." for pauses, enter the symbol as the "Pause label" parameter.

Figure 4.4 - SFSWin Align Labels dialog (2)

5. Verification and Post-processing

One of the final steps in annotating a signal is to verify that the annotation labels match your normal conventions for labelling. For example, you may want to check that only labels from a given inventory are present. Another step in the final processing may be to collapse adjacent silences/pauses into single labels.

These kinds of operation can be most easily performed with an SML script. We will present two scripts: the first checks labels against an inventory stored in a file, the second collapses silences and pauses.

Verification

We assume that an inventory of symbols is saved in a text file with one symbol per line. The following script then reports the name and location of all symbols not in the inventory.

/* anverify - verify annotation labels come from known inventory */

/* inventory */

file ip;

string itab[1:1000];

var icnt;

/* load inventory from file */

init {

string s;

openin(ip,"c:/sfs/dev/sampa.lst");

input#ip s;

while (compare(s,s)) {

icnt = icnt+1;

itab[icnt] = s;

input#ip s;

}

close(ip);

}

/* process an annotation item */

main {

var i,num;

num = numberof(".");

for (i=1;i<=num;i=i+1) {

if (!entry(matchn(".",i),itab)) {

print $filename," ";

print timen(".",i):8:4," ";

print matchn(".",i)," - illegal symbol\n";

}

}

}


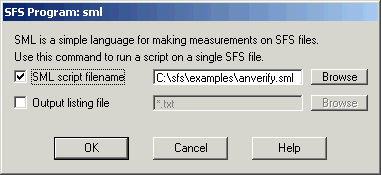

To run this script, copy and paste it into a file "anverify.sml" and create the inventory file "sampa.lst". Then select the annotation item to check and run Tools|Run SML script, see Figure 5.1.

Figure 5.1 - SFSWin Run SML script dialog

Post-processing

In this script we collapse adjacent annotations if they both label silence or pause. Specifically:

| First | Second | Result |

|---|---|---|

| ... | ... | ... |

| ... | / | ... |

| / | ... | ... |

| / | / | / |

The processed annotation item is saved back into the same file.

/* ansilproc - collapse adjacent silence annotations */

item ian; /* input annotations */

item oan; /* output annotations */

/* check annotation for silence */

function var issil(lab)

string lab

{

if (compare(lab,"/")==0) return(1);

if (compare(lab,"...")==0) return(1);

return(ERROR);

}

main {

var i,j,numf;

var size,cnt;

string lab,lab2;

/* get input & output */

sfsgetitem(ian,$filename,str(selectitem(AN),4,2));

numf=sfsgetparam(ian,"numframes");

sfsnewitem(oan,AN,sfsgetparam(ian,"frameduration"),

sfsgetparam(ian,"offset"),1,numf);

/* process annotations */

i=0;

cnt=0;

while (i < numf) {

lab = sfsgetstring(ian,i);

if ((i<numf-1) && issil(lab)) {

/* is a non-final silence */

size=sfsgetfield(ian,i,1);

j=i+1;

lab2 = sfsgetstring(ian,j);

while ((j<numf) && issil(lab2)) {

if (compare(lab2,"...")==0) lab = lab2;

size=size + sfsgetfield(ian,j,1);

j=j+1;

if (j<numf) lab2 = sfsgetstring(ian,j);

}

sfssetfield(oan,cnt,0,sfsgetfield(ian,i,0));

sfssetfield(oan,cnt,1,size);

sfssetstring(oan,cnt,lab);

i=j;

}

else {

/* final or non-silence, just copy */

sfssetfield(oan,cnt,0,sfsgetfield(ian,i,0));

sfssetfield(oan,cnt,1,sfsgetfield(ian,i,1));

sfssetstring(oan,cnt,lab);

i=i+1;

}

cnt = cnt + 1;

}

/* save result */

sfsputitem(oan,$filename,cnt);

}


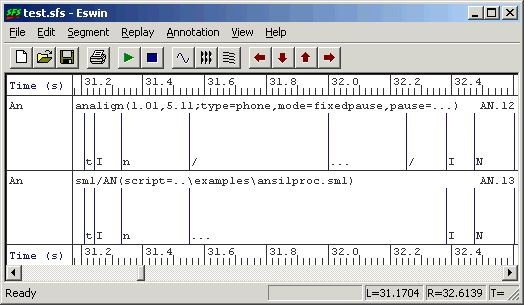

Copy and paste this script into ansilproc.sml, and run it using Tools|Run SML script. An example of the effect of the script is shown in Figure 5.2.

Figure 5.2 - Post-processing of silences

6. Annotation of dysfluent speech

This section refers explicitly to annotated recordings of dysfluent speech made available by the Speech Group of the Department of Psychology at UCL (www.psychol.ucl.ac.uk).

Description of UCL Psychology phonetic annotation system

Below is a summary of the phonetic mark-up developed by the Speech group and used on the dysfluent speech database. The basic phonetic symbol set is the JSRU symbol set described in section 3.

Word Boundaries

Word boundaries are indicated in the phonetic transcription with a symbol placed before the first syllable in the word:

- A forward slash "/" is used to mark a function word

- A colon ":" is used to mark a content word

Function words are closed class words (only about 300 in English) which perform grammatical functions while content words are open class words which carry meaning.

| Function Words | |

|---|---|

| Prepositions: | of, at, in, without, between |

| Pronouns: | he, they, anybody, it, one |

| Determiners: | the, a, that, my, more, much, either, neither |

| Conjunctions: | and, that, when, while, although, or |

| Modal verbs: | can, must, will, should, ought, need, used |

| Auxiliary verbs: | be (is, am, are), have, got, do |

| Particles: | no, not, nor, as |

| Content Words | |

| Nouns: | John, room, answer, Selby |

| Adjectives: | happy, new, large, grey |

| Full verbs: | search, grow, hold, have |

| Adverbs: | really, completely, very, also, enough |

| Numerals: | one, thousand, first |

| Interjections: | eh, ugh, phew, well |

| Yes/No answers: | yes, no (as answers) |

Beware that the same lexical word can function as either content or function word depending on its function in an utterance:

- have

- "I have come to see you" = Function Word (Auxiliary)

- "I have three apples" = Content Word (Full Verb)

- one

- "One has one's principles" = Function Word (Pronoun)

- "I have one apple" = Content Word (Numeral)

- no

- "I have no more money" = Function Word (Negative Particle)

- "No. I am not coming" = Content Word (Yes/No Answer)

Examples with the word boundary markers:

- "I saw him in the school." = /ie :saw /him /in /dha :skuul.

- "I have come to see you." = /ie /haav :kam /ta :see /yuu.
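The word boundary markers make it straightforward to recover the word categories from a transcription automatically. As a minimal sketch (the function name `split_words` is hypothetical, and it assumes that "/" and ":" occur only as word markers), the following Python splits a marked-up string into category and word pairs:

```python
import re

CATEGORY = {"/": "function", ":": "content"}

def split_words(transcription):
    """Return (category, word) pairs from a marked-up transcription."""
    pairs = []
    # A word is a marker followed by everything up to the next marker or space.
    for marker, body in re.findall(r"([/:])([^/:\s]+)", transcription):
        pairs.append((CATEGORY[marker], body.rstrip(".")))
    return pairs
```

Applied to the first example above, this yields "ie", "him", "in" and "dha" as function words and "saw" and "skuul" as content words. Stress markers, if present, are left attached to the word.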

Syllable Boundaries

The appropriate stress marker from the list below is placed at the start of each syllable, to mark syllable boundaries as well as stress:

- Exclamation mark ! prior to emphatically-stressed syllable

- Double quote " prior to primary-stressed syllable

- Single quote ' prior to secondarily-stressed syllable

- Hyphen - prior to unstressed syllable not in word-initial position

In the case of a word-initial syllable, the stress marker is positioned immediately after the word marker. The only exception is in the case of an unstressed first syllable, which does not receive a dash but instead only receives the word marker. The dash, by default, indicates that a syllable is not word initial, as well as indicating that it is unstressed.

Examples:

- :"sen-ta (centre)

- :di"tekt (detect)

- :'in-ta"naa-sha-nl (international)

- :"in-ta'naa-sha-na-lie-zai-shn (internationalization)

- :in-ta'naa-sha-na-lie"zai-shn (internationalization)

Marking dysfluencies within words

All dysfluent phones are entered in UPPER-CASE at a finer-grained level of transcription, in which each upper-case symbol represents an estimated duration of 50ms.

Multiple upper-case phones may be represented with an explicit repetition count: {x num}, e.g. if the duration of a prolonged F were 5 times 50ms, it could be transcribed either as "FFFFF" or F{x 5}. The latter is helpful in transcribing very long prolongations like F{x 30}.

Each "Q" indicates a 100ms pause within a word, e.g. a 300ms dysfluent pause would be transcribed as either QQQ or Q{x 3}.

Examples:

- Prolongations, e.g. /dhaats :FFFFFaan"taa-stik or /dhaats :F{x 5}aan"taa-stik.

- Repetitions, e.g. /dhaats /a :"load /av :"BA BA BA BA Bawl-da'daash or /dhaats /a :"load /av :"KQ KQ KQ Ko-bl-az.

Note that a space does not indicate any pausing. In the first repetition example, there is no pause between the repetitions of the "BA" sound. There are, however, brief pauses (100ms) between the "K" sounds in the second example.

For ambiguous phonetic transcription sequences the {x ...} convention is used when the symbol is repeated, e.g. the transcription "AAAAA" refers to a prolonged "A", but "AA{x 5}" refers to a prolonged "AA".

Other dysfluencies which cannot be transcribed are entered in the form of a comment at the place where they occur. For example, a block can be entered as {U block}. All dysfluencies are marked in the phonetic transcriptions.
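As a worked illustration of the "Q" convention, the Python sketch below totals the dysfluent pause time implied by a transcription, counting each "Q" as 100ms and honouring the {x num} repetition count. The function name `dysfluent_pause_ms` is hypothetical, and the sketch makes no attempt to exclude upper-case Q characters that might appear inside {U ...} comments.

```python
import re

Q_MS = 100  # each upper-case Q stands for 100ms of dysfluent pause

def dysfluent_pause_ms(transcription):
    """Total dysfluent pause duration implied by Q symbols, in milliseconds."""
    total = 0
    # Match a Q optionally followed by a repetition count such as {x 3}.
    for count in re.findall(r"Q(?:\{x\s*(\d+)\})?", transcription):
        total += int(count) if count else 1
    return total * Q_MS
```

Both "QQQ" and "Q{x 3}" give 300ms, and the second repetition example above, with three "KQ" attempts, also gives 300ms.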

Marking of supralexical dysfluencies

Word repetitions are transcribed using the syllable or word repetition convention described below (++|++), with the exception that a monosyllabic word that is repeated with no pausing, or very little, and is judged to be 'stuttered' can be transcribed within one word, thus:

- /AAND /aand or /AANDQQ/aand

Any repeated monosyllabic words that are separated by significant pausing (more than two Q) are transcribed using the convention below.

In the transcribed speech, the sections of "replaced" and "replacement" speech are each enclosed by two "+" signs and the two sections are separated by a vertical bar "|". For example:

- Syllable repetition:

/dhei /waz :"noa + :"ree Q + | + :"ree + -zan /fa /him ta :"duu /it.

- Word repetition:

/dheiz :"noa :"u-dha + /dhat + | + /dhat + /ie :'noa /ov.

- Backtracking:

/dheiz + :"noa :"u-dha /dhat Q + | + :"noa :"u-dha /dhat + /ie :'noa /ov.

- Backtracking + elaboration:

/dheiz + :"noa :"u-dha /dhat Q + | + :"noa :"u-dha :'i-di-at /dhat + /ie :'noa /ov.
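The "+ ... + | + ... +" convention is regular enough to be picked out with a pattern match. The following Python sketch extracts the replaced and replacement sections; the function name `find_repairs` is hypothetical, and the pattern assumes that "+" and "|" are used only for this mark-up.

```python
import re

# replaced section, then the vertical bar, then the replacement section
REPAIR = re.compile(r"\+\s*(.*?)\s*\+\s*\|\s*\+\s*(.*?)\s*\+")

def find_repairs(transcription):
    """Return (replaced, replacement) pairs from '+ ... + | + ... +' mark-up."""
    return REPAIR.findall(transcription)
```

For the backtracking example above, this returns one pair whose replaced section ends in the dysfluent pause "Q" and whose replacement section is the same three words without it.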

Marking of pauses between words

Pauses are marked with lower-case "q" if they are part of fluent speech intonation. Dysfluent pauses are marked with upper-case "Q".

Marking of other comments

Other comments by the transcriber are entered into the transcription using the convention {U ...text...}. This might be used for speech that could not be transcribed or for other sound events.

Division into tiers

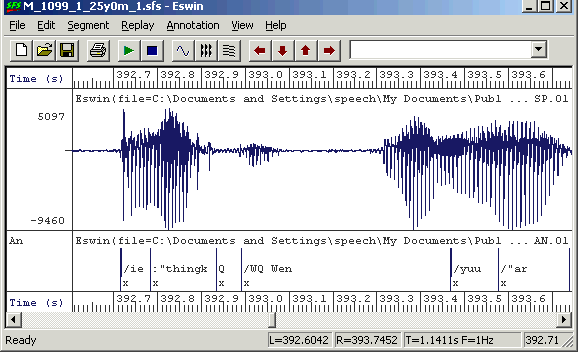

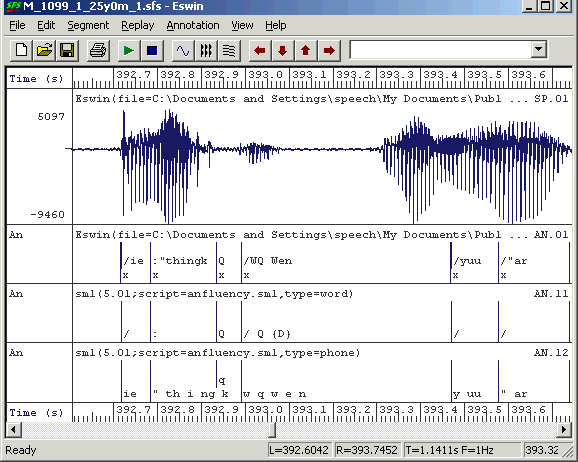

In this section we will look at how the dysfluent transcription may be divided into two tiers: the first describing the word and dysfluency events, the second describing the phonetic sequence. The advantage of this separation is that the phonetic symbol sequence may then be time aligned with the speech signal. Figure 6.1 shows an example of the database annotation prior to processing.

Figure 6.1 - Example dysfluency mark-up before processing

The following script takes as input an annotation item marked up using the system above which has been time-aligned at the level of individual syllables, as can be seen in Figure 6.1. The output of the script is two further annotation sets. The basic principle of operation is that the input transcription is parsed symbol by symbol and some symbols are directed into the word tier and some into the phone tier. In addition, redundant annotations that only mark the ends of syllables (and are less than about 5ms long) are removed.

The marking of dysfluency is changed so that conventional phonetic annotation is used in the phone tier, and a new marker "{D}" is added to the word tier. This allows us to process the phone tier using the automatic alignment procedure described in section 4.

At present no processing of "multiplier" markers is performed, so that "AA{x 5}" is divided up into "aa" on the phone tier and "{x 5} {D}" on the word tier.

    /* anfluency - process phonetic annotations used on fluency data */
    /* Mark Huckvale - University College London */
    /* version 1.0 - June 2004
     *
     * This script takes a set of phonetic annotations
     * from the UCL Psychology Speech Group fluency
     * database and normalises them to be consistent
     * with SFS conventions.
     *
     * Input is transcription of syllables or words
     * in JSRU format with additional markers showing
     * word category and dysfluency
     *
     * Output is two new annotation sets: one containing
     * only the phonetic labels and stress markers, parsed
     * with spaces between the symbols; and one with the
     * word category and dysfluency mark-up
     *
     */

    item ian;       /* input annotations */
    item oanp;      /* output annotations - phonetic */
    var oanpcnt;
    item oanw;      /* output annotations - word */
    var oanwcnt;

    /* check for uppercase */
    function var isupper(str)
    {
        string str;
        if ((ascii(str)>=65)&&(ascii(str)<=90)) return(1);
        return(ERROR);
    }

    /* convert to lower case */
    function string tolower(src)
    {
        string src;
        string dst;
        var i;
        dst="";
        for (i=1;i<=strlen(src);i=i+1) {
            if ((ascii(src:i:i)>=65)&&(ascii(src:i:i)<=90)) {
                dst = dst ++ char(ascii(src:i:i)+32);
            }
            else {
                dst = dst ++ src:i:i;
            }
        }
        return(dst);
    }

    /* check next character for digraph */
    function string checknext(prefix,ch,label)
    string label;
    {
        string prefix,ch;
        if (strlen(label)==0) return(prefix);
        if (index(ch,label:1)==1) {
            prefix=prefix++(label:1);
            label=label:2:strlen(label);
        }
        return(prefix);
    }

    /* strip next symbol from front of string */
    function string nextsymbol(label)
    string label;
    {
        string c;
        string l;
        var idx;
        while (1) {
            /* strip off first character */
            if (strlen(label)==0) return("");
            if (strlen(label)==1) {
                c=label;
                label="";
            }
            else {
                c=label:1;
                label=label:2:strlen(label);
            }
            /* action based on character */
            switch (c) {
            case " ": { /* skip */ }
            case "_": { /* skip */ }
            case "~": { /* skip */ }
            case "/": return(c);
            case ":": return(c);
            case "(": { /* dysfluency mark-up */
                /* these should probably be mapped to {} */
                idx=index("\)",label);
                if (idx) {
                    l=c++(label:idx);
                    label=label:idx+1:strlen(label);
                    return(l);
                }
                else {
                    l=c++label;
                    label="";
                    return(l);
                }
            }
            case "{": { /* dysfluency mark-up */
                idx=index("}",label);
                if (idx) {
                    l=c++(label:idx);
                    label=label:idx+1:strlen(label);
                    return(l);
                }
                else {
                    l=c++label;
                    label="";
                    return(l);
                }
            }
            pattern "[aA]": return(checknext(c,"[airwAIRW]",label));
            pattern "[cC]": return(checknext(c,"[hH]",label));
            pattern "[dD]": return(checknext(c,"[hH]",label));
            pattern "[eE]": return(checknext(c,"[eiryEIRY]",label));
            pattern "[gG]": return(checknext(c,"[xX]",label));
            pattern "[iI]": return(checknext(c,"[aeAE]",label));
            pattern "[nN]": { /* special processing for sequence "ngx" => "n gx" */
                l = checknext(c,"[gG]",label);
                if ((compare(l,"ng")==0)&&(compare(label:1,"x")==0)) {
                    /* mis-parse ngx */
                    label="g"++label;
                    return("n");
                }
                return(l);
            }
            pattern "[oO]": return(checknext(c,"[aiouAIOU]",label));
            pattern "[sS]": return(checknext(c,"[hH]",label));
            pattern "[tT]": return(checknext(c,"[hH]",label));
            pattern "[uU]": return(checknext(c,"[ruRU]",label));
            pattern "[zZ]": return(checknext(c,"[hH]",label));
            default: return(c);
            }
        }
    }

    /* process a chunk of dysfluent transcription */
    function var processlabel(posn,size,label)
    {
        var posn;
        var size;
        string label;
        string sym;
        string plabel;
        string wlabel;
        var idx;
        var dysfluent;

        /* check valid label */
        if (!compare(label,label)) return(0);

        /* initialise */
        sym=nextsymbol(label);
        plabel="";
        wlabel="";
        dysfluent=0;

        /* while symbols left */
        while (compare(sym,"")!=0) {
            if (index("[:/\({]",sym:1)) {
                /* is word type or dysfluency mark-up */
                wlabel=wlabel++" "++sym;
            }
            else if (index("[Q]",sym:1)) {
                /* is pause - add to both tiers */
                wlabel=wlabel++" "++sym;
                plabel=plabel++" q";
            }
            else {
                if (isupper(sym)) {
                    /* is dysfluent phone */
                    plabel=plabel++" "++tolower(sym);
                    dysfluent=1;
                }
                else {
                    /* is normal phone */
                    plabel=plabel++" "++sym;
                }
            }
            sym=nextsymbol(label);
        }

        /* add dysfluent marker to word tier */
        if (dysfluent!=0) wlabel = wlabel ++ " " ++ "{D}";
        if (strlen(wlabel)>1) {
            /* add word tier label */
            sfssetfield(oanw,oanwcnt,0,posn);
            sfssetfield(oanw,oanwcnt,1,size);
            sfssetstring(oanw,oanwcnt,wlabel:2:strlen(wlabel));
            oanwcnt=oanwcnt+1;
        }

        /* add something to phone tier */
        if (strlen(plabel)==0) plabel=" q";
        sfssetfield(oanp,oanpcnt,0,posn);
        sfssetfield(oanp,oanpcnt,1,size);
        sfssetstring(oanp,oanpcnt,plabel:2:strlen(plabel));
        oanpcnt=oanpcnt+1;
    }

    /* for each input file */
    main {
        string anitemno;
        var i,j,numf;
        var posn,posn2,size;
        string lab,lab2;
        var eps,pause;

        /* get input annotation set and make output annotation sets */
        anitemno=str(selectitem(AN),4,2);
        sfsgetitem(ian,$filename,anitemno);
        numf=sfsgetparam(ian,"numframes");
        sfsnewitem(oanp,AN,sfsgetparam(ian,"frameduration"),\
            sfsgetparam(ian,"offset"),1,numf);
        sfssetparamstring(oanp,"history",\
            "sml("++anitemno++";script=anfluency.sml,type=phone)");
        sfsnewitem(oanw,AN,sfsgetparam(ian,"frameduration"),\
            sfsgetparam(ian,"offset"),1,numf);
        sfssetparamstring(oanw,"history",\
            "sml("++anitemno++";script=anfluency.sml,type=word)");

        /* processing constants: small time (6ms) and pause time (100ms) */
        eps = trunc(0.5 + 0.006 / sfsgetparam(ian,"frameduration"));
        pause = trunc(0.5 + 0.1 / sfsgetparam(ian,"frameduration"));

        /* process annotations */
        oanwcnt=0;
        oanpcnt=0;
        for (i=0;i<numf;i=i+1) {
            /* get input annotation */
            lab = sfsgetstring(ian,i);
            posn = sfsgetfield(ian,i,0);
            size = sfsgetfield(ian,i,1);
            /* check is not a redundant 'x' */
            if ((i<numf-1)&&(compare(lab,"x")==0)) {
                posn2 = sfsgetfield(ian,i+1,0);
                if (posn2 > posn+eps) {
                    /* next annotation far off - insert pause */
                    sfssetfield(oanp,oanpcnt,0,posn);
                    sfssetfield(oanp,oanpcnt,1,size);
                    if (size < pause) lab="q" else lab="...";
                    sfssetstring(oanp,oanpcnt,lab);
                    oanpcnt = oanpcnt+1;
                    /* also copy long pauses into word tier */
                    if (size >= pause) {
                        sfssetfield(oanw,oanwcnt,0,posn);
                        sfssetfield(oanw,oanwcnt,1,size);
                        sfssetstring(oanw,oanwcnt,"...");
                        oanwcnt = oanwcnt+1;
                    }
                }
            }
            else if (compare(lab:1,"x")==0) {
                /* annotation starting with x - strip x */
                if (strlen(lab)>1) processlabel(posn,size,lab:2:strlen(lab));
            }
            else {
                /* normal annotation */
                processlabel(posn,size,lab);
            }
        }

        /* report processing */
        print $filename,": processed ",numf:1," annotations into ";
        print oanwcnt:1," word and ",oanpcnt:1," phone annotations\n";

        /* save results */
        if (oanwcnt > 0) sfsputitem(oanw,$filename,oanwcnt);
        if (oanpcnt > 0) sfsputitem(oanp,$filename,oanpcnt);
    }

An example of the processing performed by the script can be seen in Figure 6.2.

Figure 6.2 - Example dysfluency mark-up divided across two tiers

Subsequent Processing

Phonetic alignment of phone tier

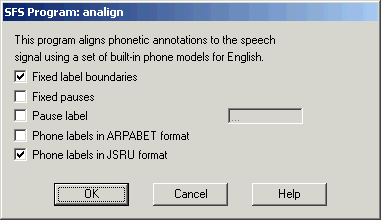

The automatic phonetic alignment of the phone tier can be performed using the tools described in section 4. Select a suitable speech and annotation item, then choose menu option Tools|Annotations|Auto align phone labels. For the phone tier annotations above we only want to align within a phone label and to use JSRU symbols. See Figure 6.3.

Figure 6.3 - Automatic phonetic alignment on JSRU symbols

Dysfluency statistics

Finally, we will show how the identification of dysfluencies in the word tier can be used to collect some statistics about their occurrence. This script counts where dysfluencies occurred and their typical duration.

    /* dysstats.sml - measure some statistics about dysfluencies */

    /* counts */
    var ncontent;   /* # in content words */
    var nfunction;  /* # in function words */
    var npause;     /* # after pause */

    /* stats */
    stat sdur;      /* stats on duration */

    /* for each input file */
    main {
        var num,i;
        string last;
        string lab;

        num=numberof(".");
        last="";
        for (i=1;i<=num;i=i+1) {
            lab=matchn(".",i);
            if (index("{D}",lab)) {
                if (index("/",lab)) {
                    nfunction=nfunction+1;
                }
                else if (index(":",lab)) {
                    ncontent=ncontent+1;
                }
                if (index("Q",last)) {
                    npause=npause+1;
                }
                sdur += lengthn(".",i);
            }
            last=lab;
        }
    }

    /* summarise */
    summary {
        print "Files processed : ",$filecount:1,"\n";
        print "Number of dysfluencies : ",nfunction+ncontent:1,"\n";
        print "Dysfluent function words : ",nfunction:1,"\n";
        print "Dysfluent content words : ",ncontent:1,"\n";
        print "Dysfluencies after pause : ",npause:1,"\n";
        print "Mean dysfluent duration : ",sdur.mean," +/- ",sdur.stddev,"s\n";
    }

An example run of the script on one 15min recording is shown below:

    Files processed : 1
    Number of dysfluencies : 28
    Dysfluent function words : 18
    Dysfluent content words : 10
    Dysfluencies after pause : 7
    Mean dysfluent duration : 0.4118 +/- 0.3442s
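For readers who want to check such figures outside SFS, the counting logic can be reproduced on exported word-tier labels. The Python sketch below is an illustration only: the function name `dysfluency_stats`, the input layout and the example data are hypothetical, and the use of the sample standard deviation is an assumption, since the formula behind the SML `stat` type is not given here.

```python
from statistics import mean, stdev

def dysfluency_stats(word_tier):
    """word_tier: list of (label, duration_seconds) pairs in time order."""
    n_function = n_content = n_after_pause = 0
    durations = []
    previous = ""
    for label, duration in word_tier:
        if "{D}" in label:
            if "/" in label:
                n_function += 1        # dysfluency in a function word
            elif ":" in label:
                n_content += 1         # dysfluency in a content word
            if "Q" in previous:
                n_after_pause += 1     # preceded by a dysfluent pause
            durations.append(duration)
        previous = label
    return {
        "function": n_function,
        "content": n_content,
        "after_pause": n_after_pause,
        "mean_duration": mean(durations) if durations else None,
        # Sample standard deviation (an assumption), needs two or more values.
        "sd_duration": stdev(durations) if len(durations) > 1 else None,
    }

# Hypothetical word-tier labels with durations in seconds.
tier = [("/", 0.20), ("Q", 0.35), (": {D}", 0.50), ("/ {D}", 0.30), (":", 0.40)]
print(dysfluency_stats(tier))
```

As in the SML script, a label counts as following a pause only when the immediately preceding word-tier label contains "Q".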

Bibliography

- BEEP British English pronunciation dictionary at ftp://svr-ftp.eng.cam.ac.uk/pub/comp.speech/dictionaries/beep.tar.gz.

- Hidden Markov modelling toolkit at http://htk.eng.cam.ac.uk/.

- International Phonetic Alphabet at http://www.arts.gla.ac.uk/IPA/ipachart.html.

- SAMPA Phonetic Alphabet at http://www.phon.ucl.ac.uk/home/sampa/.

- Speech Filing System at http://www.phon.ucl.ac.uk/resource/sfs/.

- UCL Psychology Department at http://www.psychol.ucl.ac.uk/.

- VoiScript at http://www.phon.ucl.ac.uk/resource/voiscript/.

Feedback

Please report errors in this tutorial to sfs@pals.ucl.ac.uk. Questions about the use of SFS can be posted to the SFS speech-tools mailing list.