10. Speakers and Accents

Key Concepts

- Speakers vary at all levels in the linguistic hierarchy – from physical form of vocal apparatus to linguistic habits.

- However, speakers are also highly variable in the way they use speech, so it is not always possible to identify or discriminate speakers from their voices.

- First language accents arise and change through the copying of innovations for social purposes.

- Second language accents are strongly influenced by the phonetic and phonological form of the speaker’s first language.

- Modern accent studies use experimental methods to look for changes in production and for limitations in perception.

Learning Objectives

At the end of this topic the student should be able to:

- describe some of the challenges in making robust and reliable speaker identification on the basis of acoustic measurements

- summarise the range of acoustic properties of speech that vary across speakers (for the same linguistic message)

- describe how speaker identification is performed within a forensic framework

- summarise the range of phonetic and phonological properties of speech that vary across accents

- outline the topics of concern in the study of second language accent

- outline some experimental methods used in the study of accent

Topics

- Speakers

It is a common experience that we can identify people we know from listening to the way they speak. More formally, some people you know have distinctive enough voices that in some situations their speech allows you to identify them. However, you should not take this simple fact as meaning: (i) all speakers have unique voices, or (ii) pairs of speakers can always be told apart, or (iii) a given speaker is recognisable whatever they do. These are not valid conclusions that can be drawn from the observed facts. Unfortunately, these fallacies have blighted the history of the field of speaker recognition.

Speaker Variation

First, let's consider the ways in which a person's identity could be reflected in their speech:

- Physical differences: The physical size of a person affects both the size of the larynx and the size of the vocal tract. Larynx size affects the range of fundamental frequencies produced during phonation, with larger larynges producing lower repetition rates. Vocal tract size affects the size of the acoustic cavities that give rise to formants, with larger vocal tracts giving rise to lower formant frequencies. Although larynx size and vocal tract size are two different aspects, they are often correlated, meaning that speakers with low-pitched voices also tend to have low frequency resonances. Larynx size changes at puberty, but with larger effects in men than women.

- Physiological differences: Speakers are also likely to vary in the anatomy of the vocal apparatus and its neural control. One person's vocal folds, for example, may vibrate more irregularly than another's; one person's respiratory system might deliver less air volume at lower pressures than another; or one person may have weaker articulatory muscles and so speak more slowly or less clearly. Neural control over the articulators may vary in terms of speed, accuracy or adaptability.

- Phonetic differences: Even with the same sized larynx, speakers may vary in their default pitch or voice quality. Speakers may use different habitual settings for jaw height, lip position or velum height. Speakers may also use different habitual gestures for the execution of phonological units: the exact position and timing of the tongue tip movement, say; or the degree of rounding and nasality used for particular segments. The speaker's accent will affect the exact quality of vowels and other segments, or the choice of allophonic variants in different contexts. Speakers may show differences in prosody: preferences for certain intonational tunes, speaking rate, rhythm or fluency.

- Phonological differences: Accent differences can also show up as changes to the inventory of phonological segments used in the lexicon, or to distributional differences in how the inventory is exploited in different groups of words. Speakers may even have idiosyncratic pronunciations of particular words.

- Lexical and syntactic differences: At lexical and higher linguistic levels, a speaker may show preferences for particular words, or preferences for certain word sequences, or preferences for certain syntactic constructions.

- Cognitive differences: Speakers as individuals may show preferences for certain topics of discussion, express certain attitudes to events in the world, be knowledgeable about certain fields, differ in intelligence or personality.

From this long but non-exhaustive list we note that (i) differences can be exhibited at all levels of linguistic description, and (ii) it is likely that any single characteristic will occur in many speakers (none is going to be unique to one speaker). For such potential sources of speaker information to be useful in establishing a speaker's identity, it must be shown that some combination of the factors is distinctive and reliably found in their speech.
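The link between vocal tract size and formant frequency noted under physical differences can be illustrated with the textbook uniform-tube approximation, in which a tube closed at the glottis and open at the lips resonates at odd multiples of a quarter wavelength. This is only a rough sketch: the tube model, the speed of sound value and the two tract lengths are assumptions introduced here for illustration, not measurements from these notes.

```python
SPEED_OF_SOUND_CM_S = 35000.0  # assumed approximate speed of sound in the vocal tract

def tube_formants(length_cm, n_formants=3):
    """Resonances (Hz) of a uniform tube closed at one end and open at the other."""
    return [(2 * n - 1) * SPEED_OF_SOUND_CM_S / (4.0 * length_cm)
            for n in range(1, n_formants + 1)]

longer_tract = tube_formants(17.5)   # illustrative longer vocal tract
shorter_tract = tube_formants(14.5)  # illustrative shorter vocal tract

for name, formants in (("17.5 cm", longer_tract), ("14.5 cm", shorter_tract)):
    print(name, [round(f) for f in formants])
```

Every resonance of the shorter tube is higher than the corresponding resonance of the longer one, which is the pattern described above. Real vocal tracts are not uniform tubes, so the numbers should be read as indicative only.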

Speaker Variability

Although there are many possible ways in which speakers may vary in their speech, we also have to take into account the fact that speech of a single speaker is itself highly variable:

- Content: changes to the linguistic content of a recording might affect the distribution of fundamental frequencies used, the range of spectral qualities and spectral dynamics.

- Speaking style: changes to the required intelligibility, familiarity of the interlocutor, or formality of the situation will affect the speech.

- Emotion: the nature of the emotional state of the speaker and their level of arousal will affect their speech.

- Health: the health of the speaker may change.

- Age: people's speech may vary as they get older.

- Acoustic environment and channel: the recording itself can be affected by noise, reverberation or audio channel.

- Deliberate disguise/imitation: the speaker may be acting, imitating another speaker or deliberately trying to disguise their voice.

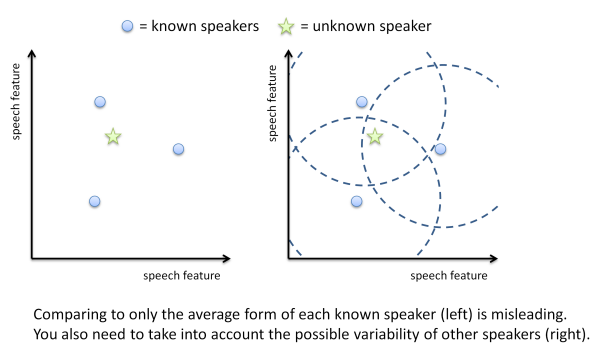

We must take into account the possibility of variability in a person's speech when we are trying to determine their identity: not just to allow their speech to vary from the average, but also to accommodate the possibility that someone else's speech might have given rise to the observed measurements. In the accompanying diagram, the speech of some unknown speaker (the star) could have been produced by any of several different speakers.
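The point about overlapping speakers can be made concrete with a small numerical sketch. Here each speaker is reduced to a single Gaussian distribution over one measurement (mean fundamental frequency in Hz), and all speakers are assumed equally likely beforehand. The speaker names, means and standard deviations are invented for illustration.

```python
import math

def gaussian_pdf(x, mean, sd):
    """Density of a 1-D Gaussian at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

# Hypothetical speakers: (mean F0 in Hz, standard deviation in Hz)
speakers = {"A": (110.0, 15.0), "B": (125.0, 20.0), "C": (200.0, 25.0)}

def posterior(observed_f0):
    """Probability of each speaker given one observation, assuming equal priors."""
    likelihoods = {name: gaussian_pdf(observed_f0, mean, sd)
                   for name, (mean, sd) in speakers.items()}
    total = sum(likelihoods.values())
    return {name: lik / total for name, lik in likelihoods.items()}

post = posterior(118.0)
for name, p in sorted(post.items()):
    print(name, round(p, 3))
```

With these made-up values an observation of 118 Hz rules out speaker C fairly firmly, but leaves A and B both plausible: the measurement narrows the field without identifying one speaker.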

Acoustic Measurements

Experimental investigations of the relationship between speech signals and identity have focussed mainly on low-level acoustic properties. The use of higher linguistic levels is difficult in experimental work since they require much longer observations of the person - so as to establish their lexical habits for example. And in most applications, the amount of speech collected from a person is unlikely to be more than a few sentences.

Although you might have thought that source properties (pitch & voice quality) would be of most use in identifying speakers, in fact most work has looked at the statistical distribution of spectral qualities. Perhaps the reason for this is twofold: firstly that voice quality features tend to affect the spectral character of the voice anyway, and second that voice source features are hard to estimate accurately from the speech signal.

The distribution of spectral parameters describes the "space" of sounds employed by the speaker, and hence their habitual sound qualities used when speaking. In situations where you know what has been spoken by the person, these spectral qualities can also be indexed by the underlying phonological segment, which then provides access to the speaker's accent.

Forensic voice comparison

An important application of the phonetics of speaker differences is in criminal cases. This is called Forensic Phonetics.

Voice experts can be called upon to compare two or more speech recordings - one collected from the criminal and one collected from the suspect. The expert is asked for an opinion as to whether it is possible (or likely) that the two recordings could have been made by the same speaker. Experts will use their own auditory phonetic judgements by listening for idiosyncratic characteristics like voice quality, speaking style and accent, often backed up by instrumental measurements of formant frequencies and prosody. See the tutorial on Forensic Phonetics in the readings.

Forensic Phonetics has a chequered history because of the claim made by some authorities that "Voiceprints" (i.e. spectrograms) are as accurate as fingerprints for identification. I hope the discussion above has convinced you that this is not the case. Just because you can choose between a few of your friends and relations from their voice does not mean that all speakers have unique voices, nor that speakers are recognisable in all circumstances. Forensic Phoneticians sometimes have to stand up in court and justify the procedures they have used and the conclusions they have drawn, so it is incumbent on them to be honest about the reliability and efficacy of their techniques. Unfortunately such high professional standards have not always been attained.

Forensic voice line-ups

The identification of speakers from their voices also arises in another forensic area: where the voice of the criminal was not recorded at the scene of the crime, but was heard by a witness to the crime (an earwitness). If the police have a suspect for the crime, they might present a recording of the suspect to the witness. To try to avoid bias, this is usually done in the form of a voice line-up.

A voice line-up is like an identity parade; the voice of the suspect is included in a list of distractor voices collected from non-suspects. The witness to the crime listens to all the voices in random order then picks out the voice they remember from the line-up.

While apparently scientific, the voice line-up is not without problems. For example, how are the distractor voices chosen? What if the suspect has a distinctive accent or voice quality - should the distractors be chosen to have the same? Many experiments have shown that listeners have a very poor memory for the qualities of speaker voices, and the results of a voice line-up need to be interpreted with care. A famous criminal case involving voice identification was the Lindbergh baby kidnapping case.

Voice disguise

Systems for disguising the voice are sometimes useful in legal cases where the identity of a witness needs to be hidden. Typically disguise uses pitch shifting and spectral warping tools developed for the music industry. These can change the apparent larynx size and vocal tract size of the speaker, and hide some aspects of voice quality. However they are not very effective at disguising accent. Testing the effectiveness of voice disguise and evaluating the performance of human listeners for speaker identification are active areas of research.

Automatic speaker recognition

Computer systems for identifying speakers from their voices, or for authenticating them, can work quite well. See Application of the Week for more details.

- Accents

Languages, Dialects, Accents and Idiolects

These definitions have been adapted from Laver (1994):

- An accent is simply the manner of pronunciation of a speaker. Everyone, in this definition, speaks with an accent. An accent involves both phonological and phonetic aspects of pronunciation.

- A dialect refers to the types and meanings of the words available to a speaker and the range of grammatical patterns into which they can be combined. Dialects may be differentiated by their morphological, syntactic, lexical and semantic patterns and inventories. A dialect may be associated with more than one accent.

- A language is made up from a group of related dialects and their associated accents. How dialects are grouped into languages can be a geopolitical decision rather than a linguistic one.

An example of two similar dialects that are nevertheless called different languages is Norwegian and Swedish. It has been said that a language is just a dialect with an army and navy.

- An idiolect is the combination of dialect and accent for one particular speaker.

In the British Isles, the dominant dialect is called Standard English (the word 'Standard' here has the meaning 'everyday' rather than 'correct'). Standard English is widely understood in all English-speaking countries.

However the British Isles has many differentiable accents. Some are associated with particular regions of the country, such as Geordie, Scouse and Cockney. Other accents are non-regional, in that speakers of these accents can be found in all areas. The most well-known non-regional accent of the British Isles is Received Pronunciation (RP), although it is now spoken by a minority.

Why do accents arise and change?

Although the definitions above define accents in terms of the speech of individuals, it is clear that many phonological and phonetic features of accents are shared by groups of speakers. These groups might be regional, or based on social class, or education. A speaker may change his/her accent if he/she moves region or changes jobs; speakers may even modify their accents depending on who they are speaking to.

The study of accents, then, is influenced by social factors. The term sociophonetics has been coined to describe the area of phonetics related to how sociological factors affect pronunciation. It can be considered part of the general study of sociolinguistics. See reading by Foulkes & Docherty for more detail.

We have two main questions to ask: how do the sound changes that differentiate accents arise, and why are these changes adopted by some sub-population of speakers?

It has been suggested that innovations in pronunciation come from two main sources: misperceptions and the introduction of novel sound varieties by outsiders. It is possible that sound qualities arising due to some contextual effects are misinterpreted by a new generation of speakers who then adopt a different underlying phonological description. At some point in the history of English, words like "laugh" and "tough" changed from a final [x] to a final [f]; possibly through a misperception of the underlying form. It has been suggested that "Black" English or "Asian" English may be the cause of accent innovation in multi-ethnic communities. The use of "uptalk" (high-rising terminal) intonation in Britain has been blamed on Australian Soap Operas.

Given some innovation in pronunciation, why should that change be adopted by other speakers? It has been suggested that pronunciation variants can in themselves become social symbols and be used to demonstrate membership of a social group, whether that is a local gang or the educated elite. That accents aid in the identification of social groups is an example of ethnocentrism:

Ethnocentrism is a nearly universal syndrome of attitudes and behaviors. The attitudes include seeing one’s own group (the in-group) as virtuous and superior and an out-group as contemptible and inferior. The attitudes also include seeing one’s own standards of value as universal. The behaviors associated with ethnocentrism are cooperative relations with the in-group and absence of cooperative relations with the out-group. Membership in an ethnic group is typically evaluated in terms of one or more observable characteristics (such as language, accent, physical features, or religion) that are regarded as indicating common descent. Ethnocentrism has been implicated not only in ethnic conflict and war, but also consumer choice and voting. [R. Axelrod, R. Hammond, 2003].

Ways in which accents vary

Here are some ways in which accents have been observed to vary, with some examples drawn from English to aid exposition. It is assumed that accents vary in similar ways in all languages.

- Phonological Inventory: On a segmental level, the sound inventory used in the mental lexicon to represent the pronunciation of words can vary from speaker to speaker. For example most British English accents differentiate the words "cart", "cot" and "caught" using three different back open vowel qualities; however many American English accents use only two vowels in the three words (i.e. "cot" and "caught" become homophones). Similarly, older speakers of English may differentiate the word "poor" from the word "paw" through the use of a [ʊə] diphthong that is not used by younger speakers.

- Lexical Distribution: Even for the same phonological inventory, there may be distributional differences in terms of which segments are used in which words. For example, Northern and Southern varieties of British English differ in the selection of /ɑː/ and /æ/ in words like "bath", or differ in the selection of /ʌ/ and /ʊ/ in words like "strut". "Rhotic" accents articulate post-vocalic /r/ in words like "farm".

- Allophonic variation: variations can occur in terms of the choice and distribution of allophones, for example the use of clear and dark variants of /l/, the replacement of syllable-final /t/ with [ʔ], or the use of the labiodental approximant [ʋ] for /r/.

- Segmental quality: most clearly, accents vary in the precise articulatory implementation of the same phonemes, particularly vowels. The Northern Irish English realisation of the phoneme /aʊ/ is more like [ɑə] than the RP variant [ɐʊ]. Vowels not only vary in timbre, but can shift between monophthongs and diphthongs: the Geordie /əʊ/ can take the form [ɔː], and the London /iː/ the form [ɪi].

- Prosody: variations also exist in prosody, both in terms of syllable timing and in intonation. For example, compare Southern English [baːd] with Northern [bad], or consider the use of "uptalk", a rising intonation used in statements.

Generally accents are not so much different sounds as the same sounds in different places. The implication of this is that accents can be detected better from realisations of known utterances rather than from global properties of the speech.

Accents of the British Isles

This table lists the major accent variations in British English as given in Hughes, Trudgill & Watt, 2005:

| Variation | Example |
|---|---|
| Vowel /ʌ/ merge | "butter" as /bʌtə/, /bʊtə/ |
| Vowels /æ/ and /ɑ/ | "laugh" as /læf/, /lɑːf/ |
| Vowels /ɪ/ and /i/ | "city" as /sɪtɪ/, /sɪti/ |
| Post vocalic /r/ | "farm" as /fɑːm/, /fɑːrm/ |
| Vowels /ʊ/ and /uː/ | "pull" as /pʊl/, /puːl/ |
| /h/ dropping | "harm" as /hɑːm/, /ɑːm/ |
| Glottal stop [ʔ] | "button" as [bʌtən], [bʌʔən] |
| Velar nasal /ŋ/ | "walking" as /wɔːkɪŋ/, /wɔːkɪn/ |
| /j/ dropping | "news" as /njuːz/, /nuːz/ |
| Long mid diphthongs | "boat" as [bəʊt], [bɔːt] |

Second Language Accent

Another significant area of research is related to the accent of non-native speakers of the language. The phonetic and phonological properties of a speaker's first language may influence their pronunciation of a second language, particularly if that is learned later in life. This topic was raised in the Week 6 research paper of the week.

In this area of study, open questions include: whether it is possible to predict which sounds will be problematic knowing the phonology of the two languages, whether the problems arise from problems in perception, and whether an ability to learn a native-like pronunciation worsens with age.

Particularly well known examples of second-language accent problems include the difficulty Japanese speakers have in differentiating English /l/ and /r/ (perhaps because in Japanese these are heard as two allophones of the same phoneme). Conversely, English speakers may use a retroflex alveolar approximant [ɹ] for the French uvular approximant [ʁ̞].

Experimental Methods

Historically, much work in accents has been undertaken by phoneticians with an ear for the varieties that people use. To identify what accents exist and their distribution across regions and social classes, a common approach would be to count the frequency of pronunciation variants as a function of indexical variables. For example, the sociolinguist Labov (1966) studied the frequency of use of post-vocalic /r/ and the frequency of use of [d] for /ð/ in different socioeconomic groups in New York:

| % use | postvocalic /r/ | /ð/ as [d] |
|---|---|---|
| Middle class | 25 | 17 |
| Working class | 13 | 45 |
| Lower class | 11 | 56 |

The availability of recorded speech materials has seen a shift towards instrumental measures of accent. Efforts have been concentrated on vowel formant frequencies, not only because vowels vary a great deal across accents, but also because vowels are easy to measure. Since we want to study accents and not individuals, it is important to find a way to normalise formant frequencies before they can be compared across speakers. To ensure that measurements are comparable, the use of standardised materials (word/sentence lists) is essential. The Accents of the British Isles (ABI) corpus, and the Intonational varieties of English (IViE) corpus are good sources of materials for British English.
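One widely used way to normalise formant frequencies across speakers is to convert each speaker's measurements of a given formant to z-scores (often called Lobanov normalisation). The notes do not prescribe a particular method, so the choice of technique here is an assumption, and the F1 values are invented: speaker B is simply speaker A scaled by 1.2, as a crude stand-in for a shorter vocal tract.

```python
import statistics

def lobanov_normalise(formants_hz):
    """Z-score one speaker's measurements of one formant across their vowels."""
    mean = statistics.mean(formants_hz)
    sd = statistics.stdev(formants_hz)  # sample standard deviation (a design choice)
    return [(f - mean) / sd for f in formants_hz]

# Made-up F1 values (Hz) for the same four vowels from two speakers
speaker_a_f1 = [300.0, 450.0, 600.0, 750.0]
speaker_b_f1 = [360.0, 540.0, 720.0, 900.0]

norm_a = lobanov_normalise(speaker_a_f1)
norm_b = lobanov_normalise(speaker_b_f1)
print([round(v, 3) for v in norm_a])
print([round(v, 3) for v in norm_b])
```

The raw values differ by up to 150 Hz, yet the normalised values coincide, so any remaining difference between two speakers after normalisation is more plausibly a difference of accent than of anatomy. The method needs a reasonably balanced set of vowels from every speaker, which is one reason standardised word lists matter.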

Readings

Essential

- V. Dellwo, M. Huckvale, M. Ashby, How is individuality expressed in voice? An introduction to speech production & description for speaker classification, in Speaker Classification I, C.Mueller (ed), Springer Lecture Notes in Artificial Intelligence, 2007. Section 4 is most relevant.

- Anders Eriksson, Tutorial on Forensic Speech Science.

Background

- F. Nolan, The Phonetic Bases of Speaker Recognition, Cambridge University Press, 2009. [available in library]

- P. Foulkes, G. Docherty, The social life of phonetics and phonology, Journal of Phonetics 34 (2006) 409–438.

Research paper of the week

Clustering Speakers into Accents

- Huckvale, M., "Hierarchical clustering of speakers into accents with the ACCDIST metric", International Congress of Phonetic Sciences, Saarbrücken, Germany, 2007.

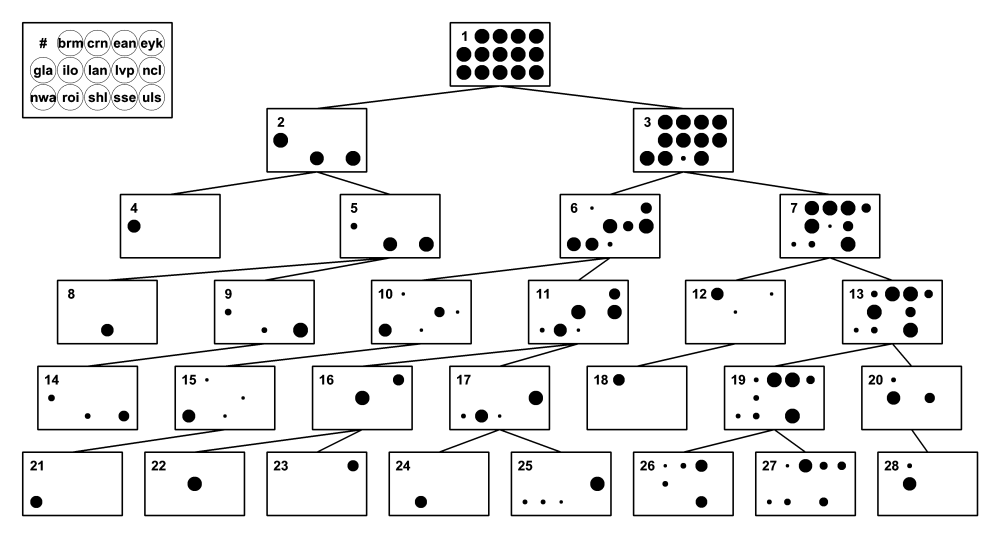

This paper describes an experiment in which 275 speakers are clustered according to patterns found in their pronunciation of 20 sentences. The objectives of the study were to explore whether conventional "accents" could be uncovered automatically as clusters of similar sounding speakers and to discover whether the similarities between accents uncovered by clustering reflect social geography.

Speakers were chosen from the Accents of the British Isles corpus. Approximately 10 male and 10 female speakers were used from 14 accent areas. Acoustic analysis was performed only on the vowels in 20 read sentences; the vowels were represented using coefficients computed from the spectral envelope of each vowel. The similarity of vowel pronunciations between each pair of speakers was computed using a metric called ACCDIST (Huckvale, 2004). This method has the nice property of not requiring the data to be normalised before comparisons are made across speakers. This is because it uses the relative similarities of vowels within the speaker as the main descriptive parameters.
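The idea of describing a speaker by the relative similarities of their own vowels can be sketched as follows. This is a simplified illustration in the spirit of that idea, not the published ACCDIST implementation: each speaker is represented by a table of distances between their own vowels, and two speakers are compared by correlating their tables. The two-dimensional vowel features, the keywords and the speakers are all invented.

```python
import math
from itertools import combinations

def distance_table(vowels):
    """Distances between every pair of one speaker's own vowels, in a fixed order."""
    keys = sorted(vowels)
    return [math.dist(vowels[a], vowels[b]) for a, b in combinations(keys, 2)]

def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def table_similarity(speaker_1, speaker_2):
    return pearson(distance_table(speaker_1), distance_table(speaker_2))

# Hypothetical speaker X: "bath" patterns with "palm"; "strut" differs from "foot"
x = {"bath": (7.0, 11.0), "palm": (7.1, 11.2), "trap": (7.5, 16.0),
     "strut": (6.5, 13.0), "foot": (4.0, 10.0)}
# Hypothetical speaker Y: same pattern as X, but every value scaled by 1.15
y = {word: (1.15 * f1, 1.15 * f2) for word, (f1, f2) in x.items()}
# Hypothetical speaker Z: "bath" patterns with "trap"; "strut" close to "foot"
z = {"bath": (7.4, 15.8), "palm": (7.1, 11.2), "trap": (7.5, 16.0),
     "strut": (4.1, 10.3), "foot": (4.0, 10.0)}

same_pattern = table_similarity(x, y)
different_pattern = table_similarity(x, z)
print(round(same_pattern, 3), round(different_pattern, 3))
```

Because only within-speaker relationships enter the comparison, the overall scaling that separates X from Y has no effect, while the different lexical distribution of Z shows up as a much lower correlation.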

The pairwise similarities of pronunciation are then used for hierarchical clustering. In this process, every speaker is put into a group of one speaker. Then the most similar groups are merged one at a time, so that eventually all speakers are merged into a single group. The ordering of the merges provides information about the hierarchical structure in the similarities. The results of hierarchical clustering are often displayed in the form of a dendrogram, see figure below.
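The merging procedure just described can be sketched in a few lines. The version below uses average linkage to measure the distance between groups, which is an assumption (the text does not say which linkage was used), and the four speakers and their pairwise distances are invented.

```python
def agglomerate(labels, dist):
    """Average-linkage agglomerative clustering; returns the merges in order."""
    clusters = [(label,) for label in labels]        # every speaker starts alone
    index = {label: i for i, label in enumerate(labels)}
    merges = []

    def linkage(c1, c2):
        # Mean distance over all cross-group pairs of speakers
        pairs = [(a, b) for a in c1 for b in c2]
        return sum(dist[index[a]][index[b]] for a, b in pairs) / len(pairs)

    while len(clusters) > 1:
        # Find the two closest groups and merge them
        i, j = min(
            ((i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        merges.append((clusters[i], clusters[j]))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges

labels = ["s1", "s2", "s3", "s4"]
dist = [
    [0, 1, 6, 7],
    [1, 0, 5, 6],
    [6, 5, 0, 2],
    [7, 6, 2, 0],
]
merges = agglomerate(labels, dist)
for left, right in merges:
    print(left, "+", right)
```

Clustering n speakers always takes n - 1 merges, so the full tree has 2n - 1 nodes; for 275 speakers that gives 549 nodes. This naive version recomputes every linkage at every step, so it is only suitable for small examples.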

Agglomerative hierarchical clustering of 275 speakers analysed by originating accent group. The figure shows the top levels of a tree of 549 nodes. The area of the disks represents the proportion of the accent group members present in the node. For clarity, nodes which contain fewer than 10 speakers, or whose parent node consists entirely of speakers of one accent have been pruned. Accent codes are: brm=Birmingham, lvp=Liverpool, crn=Cornwall, ncl=Newcastle, ean=East Anglia, nwa=North Wales, eyk=East Yorkshire, roi=Dublin, gla=Glasgow, shl=Scottish Highlands, ilo=Inner London, sse=South East, lan=Lancashire, uls=Ulster.

The results of clustering may then be examined for socio-phonetic effects. In the figure above, for example, node 2 contains the Scottish accents, while node 6 contains predominantly Northern and node 7 predominantly Southern English accents. The clustering also shows interesting socio-phonetic effects, for example node 17 includes both Irish and Newcastle accents, while 20 combines Inner London with Liverpool.

Application of the Week

This week's application of phonetics is the construction of systems that recognise the identity of a person from their voice: speaker recognition systems.

Automatic systems for recognising speakers are of two types: speaker identification systems select one speaker from N known speakers on the basis of their voice; speaker verification systems are given the claimed identity of the speaker and must confirm that identity on the basis of voice. The latter type are more widely employed at the present time, for authenticating access to buildings or bank accounts for example.

In a typical speaker recognition system, speakers enrol by recording some known sentences - say a few minutes of speech in total. These recordings are processed into a series of spectral slices (at say 100/sec) and the spectral slices are encoded in terms of a small number of features (say 10–20 numbers per spectrum). A commonly used type of acoustic feature is the cepstral coefficient. From this large collection of encoded spectra a statistical model is generated to describe the range of speech sounds produced by the speaker on average. You can think of this model as a collection of spectral means and variances, where each mean represents one "cluster" of similar sounds. The technical term for this is a Gaussian Mixture Model (GMM).
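To show what "a collection of spectral means and variances" amounts to in practice, here is a minimal sketch of how a GMM assigns a score to speech. It assumes diagonal covariances and treats successive frames as independent, both common simplifications; the two-component, two-dimensional model is invented, and training the model from enrolment data is not shown.

```python
import math

def log_gaussian(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at feature vector x."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def gmm_log_likelihood(frame, gmm):
    """Log p(frame | model); gmm is a list of (weight, mean, variance) components."""
    logs = [math.log(w) + log_gaussian(frame, m, v) for w, m, v in gmm]
    top = max(logs)
    # Log-sum-exp keeps the sum numerically stable
    return top + math.log(sum(math.exp(value - top) for value in logs))

def utterance_log_likelihood(frames, gmm):
    """Sum of per-frame log-likelihoods (frames assumed independent)."""
    return sum(gmm_log_likelihood(frame, gmm) for frame in frames)

# Hypothetical speaker model: two "clusters" of sounds in a 2-D feature space
speaker_gmm = [
    (0.6, [0.0, 0.0], [1.0, 1.0]),
    (0.4, [4.0, 4.0], [1.0, 2.0]),
]

near = gmm_log_likelihood([0.1, -0.1], speaker_gmm)    # close to a cluster mean
far = gmm_log_likelihood([10.0, -10.0], speaker_gmm)   # unlike anything enrolled
print(round(near, 2), round(far, 2))
```

Frames that resemble sounds heard during enrolment score far higher than frames that do not, and the score for a whole utterance is the sum over its frames.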

In a speaker identification application, a recording of the unknown speaker's voice is processed into spectral features and compared to each of the enrolled models in turn. The statistical model allows the calculation of the probability that the observed speech could have been generated by that speaker. The enrolled speaker model that was most likely to have generated the observed spectra identifies the chosen speaker.
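Identification therefore reduces to scoring the same frames against every enrolled model and taking the best. In the sketch below each model is simplified to a single diagonal Gaussian rather than a full mixture, purely to keep the example short; the speaker names, model parameters and test frames are invented.

```python
import math

def log_likelihood(frames, model):
    """Total log-likelihood of frames under a single diagonal Gaussian (mean, var)."""
    mean, var = model
    return sum(-0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)
               for frame in frames
               for x, m, v in zip(frame, mean, var))

def identify(frames, enrolled):
    """Return the enrolled speaker whose model best explains the frames."""
    scores = {name: log_likelihood(frames, model) for name, model in enrolled.items()}
    return max(scores, key=scores.get), scores

# Hypothetical enrolled models: name -> (mean vector, variance vector)
enrolled = {
    "anna": ([1.0, 0.0], [1.0, 1.0]),
    "ben": ([3.0, 2.0], [1.0, 1.0]),
    "cara": ([-2.0, 1.0], [1.0, 1.0]),
}
unknown_frames = [[2.8, 1.9], [3.3, 2.2], [2.5, 1.6]]

best, scores = identify(unknown_frames, enrolled)
print(best)
```

Note that this procedure always returns one of the enrolled speakers, even when the true speaker was never enrolled; it answers "which of these N?" rather than "is it any of them?".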

In a speaker verification application, a speaker provides both a recording and the name of the person he/she claims to be. The analysed recording can be run through the statistical model of the claimed speaker to find out how likely it is that that speaker produced the speech. However this is not sufficient information for verification - we do not know how likely it is that the speech could have been spoken by someone else. So in verification we also need a population or background model which is generated from statistical analysis of all the enrolled speakers. Only if the probability that the speech is generated by the claimed speaker is much bigger than the probability that it could have been generated by anyone is the claimed speaker verified.
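The comparison between the claimed speaker's model and the background model is usually expressed as a log-likelihood ratio tested against a threshold. The sketch below again simplifies each model to a single diagonal Gaussian; averaging over frames (so the score does not grow with utterance length) and the threshold of 1.0 are design choices assumed here, and all the numbers are invented.

```python
import math

def avg_log_likelihood(frames, model):
    """Per-frame average log-likelihood under a diagonal Gaussian (mean, var)."""
    mean, var = model
    total = sum(-0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)
                for frame in frames
                for x, m, v in zip(frame, mean, var))
    return total / len(frames)

def verify(frames, claimed_model, background_model, threshold=1.0):
    """Accept only if the claimed model beats the background model by a margin."""
    llr = avg_log_likelihood(frames, claimed_model) - avg_log_likelihood(frames, background_model)
    return llr > threshold, llr

claimed = ([3.0, 2.0], [1.0, 1.0])      # hypothetical model of the claimed speaker
background = ([0.5, 1.0], [4.0, 4.0])   # hypothetical, broader population model

genuine_frames = [[2.8, 1.9], [3.3, 2.2], [2.5, 1.6]]
impostor_frames = [[-1.0, 0.5], [-0.5, 1.0], [0.0, 0.0]]

accepted, genuine_llr = verify(genuine_frames, claimed, background)
rejected, impostor_llr = verify(impostor_frames, claimed, background)
print(accepted, round(genuine_llr, 2))
print(rejected, round(impostor_llr, 2))
```

Raising the threshold makes the system harder to fool but more likely to turn away the genuine speaker, so the threshold sets the balance between the two kinds of error.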

The technology for speaker authentication is well developed and is quite robust, with error rates around 1% in laboratory tests (i.e. 1% false rejects and 1% false acceptances). This is likely to be much better than humans can achieve. Systems can be divided into text-dependent and text-independent systems, the former performing better. One critical factor affecting performance is the amount of speech audio available during enrolment and recognition. The longer someone talks, the better the model of their voice that can be obtained.
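False reject and false acceptance rates are obtained by running many genuine and impostor trials through the system and counting the wrong decisions at a given threshold. A minimal sketch follows, using invented verification scores where higher means "more like the claimed speaker".

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false reject rate, false acceptance rate) at the given threshold."""
    false_rejects = sum(score <= threshold for score in genuine_scores)
    false_accepts = sum(score > threshold for score in impostor_scores)
    return (false_rejects / len(genuine_scores),
            false_accepts / len(impostor_scores))

# Hypothetical scores from trials where the claim was true / false
genuine_scores = [2.1, 1.7, 0.4, 3.0, 2.6]
impostor_scores = [-5.8, -1.2, 0.9, -3.3, 1.4]

for threshold in (0.0, 1.0, 2.0):
    frr, far = error_rates(genuine_scores, impostor_scores, threshold)
    print(threshold, frr, far)
```

With these scores a lenient threshold gives no false rejects but lets impostors through, a strict one does the reverse, and the middle setting gives equal rates of both. A quoted figure such as "1% false rejects and 1% false acceptances" refers to an operating point of this balanced kind, and depends on the test recordings used.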

Many factors can contribute to verification and identification errors:

- Misspoken or misread prompted phrases

- Extreme emotional states (e.g. stress or duress)

- Time varying (intra- or inter- session) microphone placement

- Poor or inconsistent room acoustics (e.g. reverberation and noise)

- Channel mismatch (e.g. using different microphones for enrolment and verification)

- Sickness (e.g., head colds can alter the vocal tract)

- Ageing (the vocal tract can drift away from models with age)

You can read more about the construction of speaker recognition systems from this tutorial chapter by Joseph Campbell.

Reflections

You can improve your learning by reflecting on your understanding. Check that you have answers to the questions below.

- Think of factors that might affect the reliability of earwitness evidence.

- What criteria should one use to choose "foils" for a voice line-up?

- Why might the performance of a speaker recognition system in the laboratory not be a good predictor for its performance in the field?

- Why might a non-regional accent arise?

- What would happen if you compared speakers' accents using un-normalized vowel formant frequencies?

- Suggest reasons why accents change over time.

Last modified: 15:00 09-Dec-2020.